Setting Up Access Control for Your APIs

Authentication proves who is calling your API. Access control determines what they are allowed to do. Without a strong model, APIs either become too permissive (risking data leaks and fraud) or too restrictive (breaking integrations). Getting it right requires both the right infrastructure and the right policy model.

APIs are increasingly the backbone of integrations between organizations, and onboarding a partner safely depends on setting up reliable access control from day one. It’s not enough to expose endpoints and issue credentials — you need a layered model that defines who can call your APIs, what operations they’re permitted to perform, and how those rules evolve as your ecosystem scales.

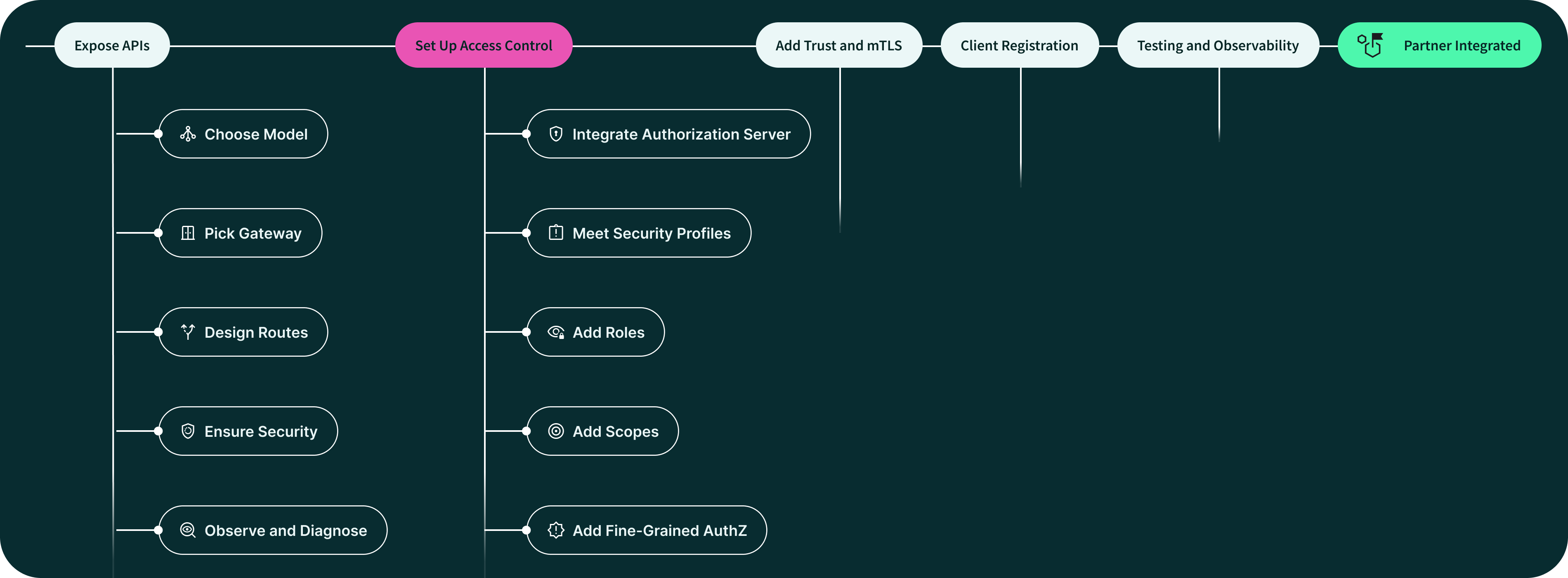

This post is part of our series “Onboarding Your First Partner in 30 Days”, where we walk through the practical steps of taking an API from concept to production-ready partner access. See the Exposing APIs the Right Way article for the first part of the series.

Linking the Gateway and Authorization Server To Control Access to APIs

Access control starts with a tight integration between your API Gateway (the policy enforcement point) and the Authorization Server (the policy decision point + token issuer). The gateway must be the first place a request is stopped if the token, binding, or claims are invalid.

Client Authentication to Authorization Server

How the client proves its identity when requesting a token determines the assurance you can place on that token.

- mTLS (mutual TLS) — client presents a certificate during the TLS handshake. The AS validates the certificate chain and (optionally) maps the cert to a client id. Use when you need high assurance and easy revocation via cert rotation/CRLs. mTLS is a first-class method in FAPI.

-

private_key_jwt (JWT client assertion) — client signs a JWT with its private key and sends it to the token endpoint (

client_assertion). The AS validates the signature against a registered JWK. This is PKI-based but done in-band over HTTPS. -

client_secret_basic / client_secret_post — simple shared-secret authentication (OK for low-assurance M2M/testing/PoCs; avoid for high-sensitivity APIs).

Example of a JWT client assertion payload (sent as client_assertion):

{

"iss": "client-123",

"sub": "client-123",

"aud": "https://auth.example.com/oauth/token",

"jti": "unique-id-1",

"iat": 1690000000,

"exp": 1690000300

}

The AS verifies signature, iss/sub, aud == token endpoint, exp, and jti

uniqueness.

Token Formats — JWT vs Opaque Tokens (And Hybrid Patterns)

Two common token formats have different trade-offs:

-

JWT (self-contained)

-

Gateway can validate locally: signature,

iss,aud,exp,nbf, and application claims (scp/scope,azp,jti, customroles). -

Pros: low latency, no AS call per request.

-

Cons: revocation is hard; key rotation must be handled carefully.

-

-

Opaque tokens + Introspection RFC 7662

-

Gateway calls the AS introspection endpoint to learn

active,scope,client_id,exp, and custom attributes. -

Pros: immediate revocation, central policy changes reflected instantly.

-

Cons: additional latency and AS availability dependence.

-

Hybrid: validate JWT signature locally for performance, but call

introspection on suspicious tokens (or periodically) or when token binding/cnf

claims need server-side verification.

Example Introspection (curl)

curl -u client-id:client-secret \

-X POST https://auth.example.com/oauth2/introspect \

-d "token=opaque-token-value" \

-d "token_type_hint=access_token"

Sample introspection response:

{

"active": true,

"scope": "inventory:read metrics:publish",

"client_id": "svc-123",

"exp": 1735729200,

"iat": 1690000000

}

What Gateways Must Validate (JWT And Opaque)

When the gateway accepts a token it should, at minimum, validate:

-

iss— token was issued by your AS. -

aud— includes the API or gateway resource identifier (reject tokens without the expected audience). -

exp/nbf/iat— respect expiry and allow small clock skew (e.g., 60s). -

kid/ signature — verify signature using the JWKS entry matchingkid. -

scopeorscp— ensure required scope(s) are present for the endpoint. -

azp— if present andaudcontains multiple targets, ensureazpmatches the legitimate client. -

cnf— a confirmation claim used for bound tokens (mTLS, DPoP). If present, validate the proof (see below). -

jti— optional but useful for logging and possible replay detection.

Example (pseudo) check in gateway code:

const payload = jwtVerify(token, jwk); // verify signature

if (payload.iss !== EXPECTED_ISS) throw 401;

if (!payload.aud.includes(API_AUDIENCE)) throw 403;

if (!payload.scope.split(' ').includes('inventory:read')) throw 403;

if (payload.exp < now - 60) throw 401; // allow 60s clock skew

Token Binding and Proof-of-Possession

Bearer tokens are easy to steal and replay. To prevent this, access tokens can be bound to a key or certificate so that only the legitimate holder can use them. Two practical options are mTLS and DPoP.

mTLS-Bound Tokens

In mutual TLS, the client presents a certificate when requesting a token. The

authorization server issues a token with a cnf claim containing the

certificate fingerprint. When the client later calls the API, the gateway checks

that the TLS session uses the same certificate. A stolen token is useless

without the matching private key. This model is operationally heavier because it

requires certificate lifecycle management, but it delivers the strongest binding

and is mandatory in profiles like FAPI.

DPoP

DPoP avoids client certificates by binding tokens to a public key instead. The

client proves possession of the key by attaching a signed DPoP proof JWT to each

API request. The gateway verifies that the proof matches the request URI and

method, and that the token’s cnf claim refers to the same key. Unlike mTLS,

DPoP is lightweight and works in browsers or mobile apps, but it shifts

complexity to request-level validation and replay protection.

JWKS, Key Rotation and Caching

When validating JWTs locally you rely on the AS’s jwks_uri. Implement these

best practices:

-

Fetch the JWKS on startup and cache it. Use

kidto select the correct key. -

Observe

Cache-Control/Expiresheaders; refresh proactively before keys expire. -

Implement a fallback: if

kidis unknown, fetch JWKS immediately and retry verification once. -

Support key rollover: AS should publish a new key alongside old signing keys for a transition window.

-

Don’t assume infinite cache; apply a TTL that balances load and rotation requirements.

Revocation, Lifetimes, And Design Trade-Offs

-

Short-lived tokens (e.g., minutes–hours) reduce window for abuse and minimize need for revocation. For JWTs, this is essential because you can’t reliably revoke a signed token unless you add state.

-

Revocation endpoints (RFC 7009) work for opaque tokens — the AS can mark tokens revoked, and the gateway will find out on its next introspection or via a revocation cache.

-

Token exchange (RFC 8693) is useful when a caller should receive a downgraded token for a less-trusted downstream service (avoid passing a high-privilege token around).

Gateway Enforcement Patterns

There are three common enforcement patterns — choose based on security and performance needs:

-

Local JWT validation only — fastest, simplest. Use when immediate revocation is not required and keys are well managed.

-

Introspection (centralized) — gateways call the AS for each token (or cache introspection). Use when you need immediate revocation control and central policy enforcement.

-

Hybrid — validate signature locally, but consult the AS for tokens with special claims, for tokens about to expire, or when

jti/revocation checks are needed.

You can also push policy decisions to a PDP (policy decision point) such as OPA: the gateway forwards request context + token claims to OPA and enforces the allow/deny and obligations returned by OPA. That lets you keep authorization logic out of the gateway and express complex rules (time windows, tenant ownership, rate thresholds) centrally.

Example OPA call payload:

{

"input": {

"method": "POST",

"path": "/inventory",

"client_id": "svc-123",

"claims": { "scope": "inventory:write", "roles": ["ingest"] }

}

}

Resilience, Latency and Operational Concerns

Caching

Both JWKS documents and introspection responses should be cached to keep latency low and reduce load on the authorization server. Caches must respect the exp claim in tokens so that expired credentials are never accepted, and TTLs should be conservative enough to allow for key rollover and revocation.

Authorization Server Availability

The gateway’s behavior when it cannot reach the authorization server is critical. For sensitive APIs, the system should fail closed and deny requests until validation is restored. In lower-risk cases, it may be acceptable to rely on cached validation data, but this must be a deliberate trade-off, not an accident.

Circuit Breakers

Because token introspection adds a dependency on the authorization server, circuit-breaker patterns should be in place. The gateway should detect repeated failures, trip the breaker, and serve controlled responses while alerting operators. Monitoring latency and error rates of introspection calls helps catch issues early.

Clock Synchronization

JWT validation depends on accurate time checks for claims like exp, nbf, and iat. Even a small drift can cause valid tokens to be rejected or expired tokens to be accepted. All gateway nodes should use NTP or another synchronization service to ensure clocks stay tightly aligned.

Logging, metrics, and audit trail

Log sufficient data for forensics without leaking secrets:

jti,client_id,scope,iss,aud,decision(allow/deny),policy_id(if PDP used), request path, and timestamp.- Capture AS call latencies and introspection error rates as SRE/SEC signals.

- Keep logs tamper-resistant and retained per policy for audits.

Meeting Security Profiles (FAPI and Beyond)

Even outside regulated industries, aligning to security profiles raises your baseline. The most prominent is the Financial-grade API (FAPI) profile from the OpenID Foundation, which enforces:

- mTLS or private_key_jwt for client authentication.

- Pushed Authorization Requests (PAR) to avoid leaking sensitive params in front channels.

- JARM (JWT-secured responses) to prevent tampering.

- Strict scope and claim validation, preventing token misuse.

- End-to-end auditability, with signed logs of all auth flows.

Implementing FAPI ensures that access to sensitive APIs is governed by best-in-class patterns. Even if you aren’t a bank, adopting its requirements helps achieve Zero Trust by default.

Roles for Machines, Not Humans

With infrastructure in place, the next step is defining who your clients are.

In machine-to-machine (M2M) contexts, clients aren’t people but service

identities. Each workload — cart-service, payment-processor,

metrics-publisher — should authenticate with its own credentials and be tied

to an explicit role.

A role is a contract between a client and the system: these are the only operations this client can perform. The mistake many teams make is reusing a single generic client identity (for example, “partner-app”) across multiple integrations. That blurs responsibility and makes least-privilege impossible.

In containerized platforms, the model is obvious. A Kubernetes ServiceAccount

can be bound to a Role with narrow verbs and resources:

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

namespace: reports

name: report-reader

rules:

- apiGroups: [""]

resources: ["jobs"]

verbs: ["get", "list"]

The same principle applies at the API layer. If the metrics-publisher client

only ever writes monitoring data, its access token should not contain rights for

user deletion or inventory queries. Instead, its registered role might be

represented in the token itself:

{

"client_id": "metrics-publisher",

"roles": ["metrics-writer"]

}

The gateway (or an external PDP like OPA) evaluates whether the role is allowed to call a given endpoint. That mapping should live outside application code — in policy files or gateway configuration — to avoid drift.

For higher assurance, roles can be coupled with attestation of the environment: for instance, issuing a token only if the workload presents a signed SPIFFE/SPIRE ID or a workload identity from a trusted orchestrator. That way, you know not only the declared role, but also the runtime context in which it runs.

Scopes as Coarse-Grained Guards for API Access

Scopes are the first line of authorization: broad entitlements that describe what a client is generally allowed to do. They are coarse-grained by design — intended to gate classes of operations before finer policy is applied.

A typical access token might carry multiple scopes:

{

"scope": "inventory:read metrics:publish"

}

When the client calls:

GET /inventory/123

Authorization: Bearer eyJhbGciOi...

the gateway checks that inventory:read is present before routing to the

backend. If not, it immediately returns 403 Forbidden. This keeps the backend

API free of repetitive scope checks and ensures enforcement is consistent across

services.

The hard part is scope design. Scopes should be domain-oriented and

stable, not ad hoc. A name like api:v2:orders:write communicates both the

API domain and the version, making it easier to track usage across multiple

services. If you later evolve the API, introducing api:v3:orders:write gives a

clear upgrade path without ambiguity.

Scopes also benefit audits and monitoring. Because they are predictable, logs and metrics can be aggregated to show which scopes are exercised most and which clients consume them. This visibility is lost if you rely on arbitrary, service-specific permissions.

Finally, scopes compose well with fine-grained claims. For example, a token

might assert inventory:read but also include an account_id claim. The scope

opens the door, and downstream policy uses the additional claim to restrict

which inventory records can be read.

Combining Roles and Scopes for API Access Control

Roles and scopes serve different purposes but reinforce each other when applied consistently. Roles answer the question who is this client, and what class of actor are they? Scopes answer what operations has this client been authorized to perform in this request?

Consider a metrics-publisher client. Its role is metrics-writer, which

identifies it as a service whose sole purpose is to push telemetry. That role

determines which scopes it is allowed to request — for example,

metrics:publish. When the client authenticates, the authorization server

issues a token containing only the scopes that its role permits.

This creates a chain of control:

- Role-to-scope mapping defines which scopes each client role may hold.

- Scopes in the token act as coarse guards checked by the gateway.

- Backend services remain scope-agnostic and trust the gateway to enforce.

Example token for the metrics-publisher:

{

"client_id": "metrics-publisher",

"roles": ["metrics-writer"],

"scope": "metrics:publish"

}

When the gateway receives a call, it checks that the scope matches the

endpoint being requested. If the client attempted to call an unrelated API (say

DELETE /users/123), the request would fail because the scope is absent, even

though the token is otherwise valid.

This layered model prevents privilege creep. Roles keep service identities distinct and scoped to their purpose, while scopes ensure tokens are never overbroad. Together, they create a predictable enforcement surface where authorization logic is centralized and auditable.

Fine-Grained Authorization with RAR

Scopes alone are binary: you either have orders:write or you don’t. For

sensitive APIs, that isn’t enough. OAuth Rich Authorization Requests (RAR)

allow clients to request access with contextual detail.

Example request:

{

"authorization_details": [

{

"type": "data_access",

"actions": ["read"],

"locations": ["https://api.example.com/resources/abc"],

"limit": { "records": 100 }

}

]

}

Here the client is not asking for blanket data:read, but to read 100 records

from a specific dataset. The AS evaluates this against policy and issues a

token bound to just that action.

RAR enables contextual, auditable access decisions — crucial in domains where volume, scope, or sensitivity of data must be tightly controlled.

→ Interested in RAR? Read a dedicated blog post about Enabling Fine-Grained Consent with OAuth Rich Authorization RequestsAuthorization Policies for API Access Control

Access control in modern APIs is more than just roles and scopes. Policies define the rules for what a client can do under specific conditions, and risk signals influence decisions dynamically. A robust model considers who, what, where, when, and how:

-

Who — the client identity, roles, and credentials.

-

What — requested operation, endpoint, or resource.

-

Where — client IP, geolocation, network, or environment attestation.

-

When — temporal constraints, e.g., business hours, or token age.

-

How — method of request (mTLS, DPoP, JWT vs opaque) or device posture.

Policy Decision Points (PDP) in Practice

Policy logic is extracted from application code and centralized in a PDP. This ensures:

-

Consistent enforcement across multiple gateways or microservices.

-

Auditable decisions with inputs, context, and output logged.

-

Context-aware decisions, e.g., rejecting a write if the source IP is outside a trusted range or the client hasn’t performed a recent attestation.

Example OPA policy considering risk:

package api.inventory

default allow = false

allow {

input.method == "GET"

input.path == "/inventory/" + item

input.claims.scope == "inventory:read"

input.claims.tenant_id == data.items[item].tenant_id

input.risk_score < 50

}

risk_score := score {

score := 0

input.client_geo != "EU" # add points if outside expected region

input.request_time < "08:00" # early hours

}

Here, risk_score adjusts access dynamically, integrating environmental signals (geo, time, device posture, previous suspicious behavior) into authorization.

Obligations and Enforcement

Policies can return obligations beyond allow/deny, which the gateway or microservice enforces:

-

Redaction / filtering — hide sensitive fields unless explicitly allowed.

-

Rate limiting — enforce per-client or per-scope limits dynamically.

-

Step-up authentication / attestation — request additional proofs if risk is high.

-

Logging / auditing — automatically include request context and PDP inputs in secure audit logs.

Obligations make authorization actionable and allow centralized policies to propagate consistent enforcement across services.

Dynamic Scope and Role Mapping

Roles and scopes remain a fast, coarse-grained guard:

-

Roles → Scopes mapping controls what a client can request from the AS.

-

Scopes in tokens are checked immediately at the gateway.

-

PDP policies refine authorization based on context, risk, or additional claims (tenant, account, transaction limits).

This layered approach ensures defense-in-depth, where a single compromised token or misconfigured scope cannot bypass runtime enforcement.

Operationalizing Access Control

Access control isn’t a static configuration; it’s a living, operational system. Once roles, scopes, and tokens are defined, the system must continuously enforce, monitor, and adapt access decisions.

Centralized Policy Enforcement

Rather than scattering authorization logic across microservices, use a Policy Decision Point (PDP) such as OPA or Cedar. Gateways forward requests, client claims, and context to the PDP, which evaluates policies and returns allow/deny decisions and obligations:

-

Obligations instruct the gateway or backend to take additional actions, like:

- Redacting sensitive fields.

- Rate limiting per scope or client.

- Triggering step-up authentication for high-risk operations.

- Logging enriched context for audit trails.

Centralizing policies ensures consistent behavior across multiple APIs, reduces drift, and makes updates auditable.

Risk-Aware Authorization

Modern APIs operate in dynamic, multi-tenant environments where static roles and scopes aren’t enough. Incorporate risk signals into authorization decisions:

- Client posture — workload identity, TLS cipher strength, certificate validity.

- Request context — geolocation, IP, time-of-day, device or environment attestation.

- Behavioral anomalies — sudden spike in requests, unusual endpoints, abnormal payload sizes.

- Transaction limits — enforce RAR-style maximums or per-client throttling.

A PDP can evaluate these signals alongside roles and scopes to allow, deny, or trigger additional steps dynamically. This approach enables fine-grained, context-sensitive security without complicating backend services.

Logging, Metrics, and Audit Trails

Operational maturity depends on observability:

- Log every decision with

client_id,roles,scopes,risk_score,policy_id, endpoint, and timestamp. - Capture latency and error metrics for token validation, introspection, and PDP calls.

- Ensure logs are tamper-resistant, centrally collected, and retained per compliance requirements.

- Use these logs to detect anomalies, perform audits, and refine policies over time.

Token and Credential Management

- Use short-lived tokens and certificates, rotated automatically.

- Support revocation endpoints for opaque tokens, and proactive key rotation for JWTs.

- Correlate PDP decisions with token and certificate lifecycle to detect expired or compromised credentials.

Resilience and Availability

Gateways and PDPs introduce dependencies. Build for reliability:

- Caching JWKS / introspection carefully — balance latency, revocation, and key rotation.

- Circuit breakers for PDP or introspection endpoints to prevent cascading failures.

- Fail-closed defaults for sensitive APIs to ensure security when the PDP is unreachable.

- Maintain clock synchronization across nodes to avoid JWT validation errors.

Putting It All Together

A robust access control architecture for APIs combines policy, context, and operational rigor. Here’s the layered model in practice:

-

Gateway ↔ Authorization Server integration — token validation and binding form the enforcement foundation.

-

Machine roles — define service identities and their broad responsibilities.

-

Scopes — act as coarse-grained gates for API endpoints.

-

Centralized policies — evaluate roles, scopes, risk signals, and obligations dynamically.

-

Risk signals and context-aware decisions — ensure authorization is adaptive to client posture, environment, and transaction details.

-

Fine-grained controls (RAR or claims) — enforce contextual access, limits, or redaction.

-

Operational observability — logs, metrics, and audit trails allow continuous monitoring, anomaly detection, and compliance.

When executed correctly, this architecture ensures that:

-

Privilege creep is prevented — roles and scopes remain tightly controlled.

-

Sensitive operations are risk-aware — access is evaluated dynamically, not statically.

-

Backends remain simple — all policy enforcement is centralized or delegated to gateways/PDPs.

-

Auditable enforcement exists — all decisions are logged, traceable, and verifiable.

In short, APIs are no longer just endpoints; they become enforceable contracts, resilient, auditable, and dynamically controlled at scale.

Conclusion

Access control isn’t just a checkbox; it’s the backbone of secure, resilient, and auditable APIs. A robust model combines machine roles, scopes, centralized policies, risk signals, and fine-grained authorization to enforce least privilege across your ecosystem. Every token, every request, and every decision should be verifiable, context-aware, and auditable — ensuring that privilege creep is prevented and sensitive operations remain protected. By integrating gateways, authorization servers, and PDPs, and operationalizing observability, caching, revocation, and resiliency, APIs become more than endpoints: they become enforceable contracts, dynamically controlled and secure by design.

Practical Guide

Onboard Your First Partner in 30 Days - No Fluff, Just Code

Get the practical developer playbook with weekly steps, real configs, and copy-ready code to build, secure, and distribute your APIs fast.

Access Now →